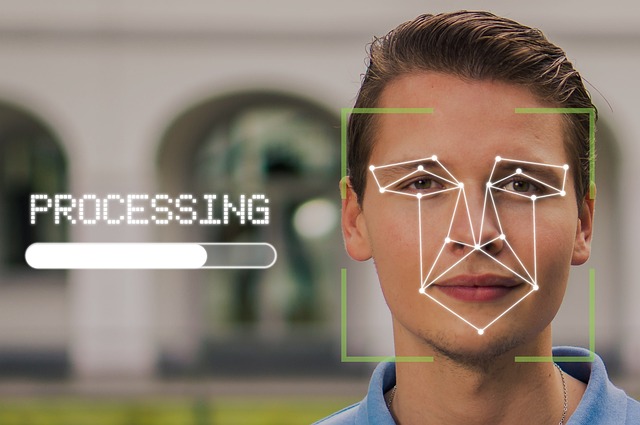

Face recognition is a biometric technology that employs facial characteristics to identify or authenticate individuals. This technology falls under the categories of computer vision and artificial intelligence and has found extensive applications in fields such as security, healthcare, and entertainment. By examining distinctive features of a person’s face, including the spacing of the eyes, the configuration of the jawline, and the shape of the nose, face recognition systems are capable of delivering precise and effective identification.

The technology operates through multiple phases, which encompass image acquisition, feature extraction, and subsequent comparison with a database of recognized faces. Contemporary face recognition systems frequently utilize machine learning algorithms, especially deep learning techniques, to attain a high level of accuracy, even in difficult circumstances such as inadequate lighting, obstructions, or variations in facial expressions.

In this article, we focus on building a simple face recognition tool using the OpenFace library.

Facial recognition has two main modes.

- Face verification: a one-to-one comparison that checks a given face against a single claimed identity.

- Face identification: a one-to-many comparison that matches a given face against a database of known faces.
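To make the difference between the two modes concrete, here is a minimal sketch in plain Python. It assumes each face has already been turned into a numeric embedding vector; the function names, the three-dimensional toy vectors, and the distance threshold of 1.0 are all made up for illustration and are not part of OpenFace.

```python
import math

def euclidean(a, b):
    """Euclidean distance between two embedding vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def verify(probe, claimed, threshold=1.0):
    """Verification (one-to-one): does the probe match the claimed identity?"""
    return euclidean(probe, claimed) < threshold

def identify(probe, gallery, threshold=1.0):
    """Identification (one-to-many): closest known face, or None if nobody is close enough."""
    best_name, best_dist = None, float("inf")
    for name, embedding in gallery.items():
        dist = euclidean(probe, embedding)
        if dist < best_dist:
            best_name, best_dist = name, dist
    return best_name if best_dist < threshold else None

# Hypothetical embeddings; real ones have many more dimensions.
gallery = {"alice": [0.1, 0.9, 0.2], "bob": [0.8, 0.1, 0.5]}
probe = [0.15, 0.85, 0.25]

print(verify(probe, gallery["alice"]))  # one-to-one check
print(identify(probe, gallery))         # one-to-many search
```

Verification needs a single comparison, while identification scans the whole gallery and must also decide when the best match is still too far away to count.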

OpenFace library:

OpenFace is a Python and Torch implementation of face recognition with deep neural networks and is based on the CVPR 2015 paper "FaceNet: A Unified Embedding for Face Recognition and Clustering" by Florian Schroff, Dmitry Kalenichenko, and James Philbin at Google. (Source: OpenFace)

OpenFace, despite being relatively new, has gained significant traction due to its accuracy levels that are comparable to those of advanced facial recognition models utilized in leading private systems, including Google’s FaceNet and Facebook’s DeepFace.

OpenFace uses Google’s FaceNet architecture for feature extraction and trains the neural network with a triplet loss function. Each training example consists of three images: a known face image called the anchor, a second image of the same individual (the positive), and an image of a different person (the negative). The loss pushes the anchor’s embedding closer to the positive’s embedding than to the negative’s, so that images of the same person end up close together in the embedding space.
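The triplet loss described in the FaceNet paper can be written as max(d(anchor, positive) - d(anchor, negative) + margin, 0), where d is the squared Euclidean distance between embeddings. The sketch below computes it for a single triplet in plain Python. The two-dimensional vectors are invented for illustration, and the margin of 0.2 is only an example value; this is not OpenFace's training code.

```python
def squared_distance(a, b):
    """Squared Euclidean distance between two embeddings."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def triplet_loss(anchor, positive, negative, margin=0.2):
    """Zero when the negative is at least `margin` farther from the anchor than the positive."""
    gap = squared_distance(anchor, positive) - squared_distance(anchor, negative)
    return max(gap + margin, 0.0)

anchor = [0.0, 1.0]
positive = [0.1, 0.9]       # same person, close to the anchor
easy_negative = [1.0, 0.0]  # different person, already far away
hard_negative = [0.1, 1.0]  # different person, but too close to the anchor

print(triplet_loss(anchor, positive, easy_negative))  # no penalty
print(triplet_loss(anchor, positive, hard_negative))  # positive loss
```

Only triplets that violate the margin contribute to the loss, which is why the choice of hard negatives matters so much during training.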

To build a simple face recognition model using OpenFace, we must complete the following steps.

- Build a dataset

- Perform face detection

- Extract face embeddings

- Perform training

- Perform face recognition
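The last three steps can be sketched with a very simple classifier. The example below assumes the embeddings have already been extracted (here they are made-up two-dimensional vectors), "trains" by averaging each person's embeddings into a centroid, and recognises a new face by picking the nearest centroid. A nearest-centroid classifier is a stand-in chosen for brevity; the helper names are hypothetical, and a real project might train a different classifier on the embeddings.

```python
import math

def mean_embedding(embeddings):
    """Average a list of embeddings component by component."""
    count = len(embeddings)
    return [sum(values) / count for values in zip(*embeddings)]

def train(dataset):
    """dataset maps a person's name to a list of embeddings; returns name -> centroid."""
    return {name: mean_embedding(embs) for name, embs in dataset.items()}

def recognise(model, embedding):
    """Return the name whose centroid is closest to the given embedding."""
    return min(model, key=lambda name: math.dist(model[name], embedding))

# Hypothetical dataset: two images per person, already converted to embeddings.
dataset = {
    "alice": [[0.0, 1.0], [0.2, 0.8]],
    "bob": [[1.0, 0.0], [0.8, 0.2]],
}
model = train(dataset)
print(recognise(model, [0.3, 0.7]))
```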

The process is divided into two parts.

1. Face Detection:

Face detection is an important part of this process: we must locate the faces in a given image before we can recognise them. Before it can process any data, the detection algorithm must be trained on both positive images (those containing a face) and negative images (those without one). Once training is complete, features are extracted from the images. Next, the dimensions of the target image are determined; it is typically smaller than the training images. The target image is then used to compute the average pixel values within each section, and if these averages meet the learned thresholds, a match is declared. A single classifier may not be accurate enough, so multiple classifiers are combined to improve the result.

The classifier is strengthened by combining it with multiple other classifiers. AdaBoost is a machine learning algorithm that builds this combination: in each round it selects the weak classifier that performs best on the training images, gives more weight to the examples that were misclassified, and adds the selected classifier to a weighted ensemble. To improve the outcome, it may even reverse the process.
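To show how boosting combines weak classifiers, here is a small, generic AdaBoost sketch in plain Python. The weak classifiers are decision stumps (a single threshold on a single feature), and the one-dimensional toy data is invented for illustration. It is not the detector's actual training code; in a real face detector the features would be Haar feature values rather than raw numbers.

```python
import math

def stump_predict(x, feature, threshold, polarity):
    """Weak classifier: +1 on one side of the threshold, -1 on the other."""
    return 1 if polarity * x[feature] < polarity * threshold else -1

def train_adaboost(X, y, rounds=3):
    """Return a list of (alpha, feature, threshold, polarity) weighted stumps."""
    n = len(X)
    weights = [1.0 / n] * n
    ensemble = []
    for _ in range(rounds):
        best = None
        # Pick the stump with the lowest weighted error.
        for feature in range(len(X[0])):
            for threshold in sorted({x[feature] for x in X}):
                for polarity in (1, -1):
                    err = sum(w for w, x, label in zip(weights, X, y)
                              if stump_predict(x, feature, threshold, polarity) != label)
                    if best is None or err < best[0]:
                        best = (err, feature, threshold, polarity)
        err, feature, threshold, polarity = best
        err = min(max(err, 1e-10), 1 - 1e-10)  # avoid division by zero
        alpha = 0.5 * math.log((1 - err) / err)
        ensemble.append((alpha, feature, threshold, polarity))
        # Increase the weight of misclassified examples, decrease the rest.
        weights = [w * math.exp(-alpha * label * stump_predict(x, feature, threshold, polarity))
                   for w, x, label in zip(weights, X, y)]
        total = sum(weights)
        weights = [w / total for w in weights]
    return ensemble

def predict(ensemble, x):
    """Weighted vote of all stumps."""
    score = sum(alpha * stump_predict(x, f, t, p) for alpha, f, t, p in ensemble)
    return 1 if score >= 0 else -1

# Toy data that no single threshold can separate.
X = [[v] for v in range(10)]
y = [1, 1, 1, -1, -1, -1, 1, 1, 1, -1]
ensemble = train_adaboost(X, y, rounds=3)
print([predict(ensemble, x) for x in X])
```

No single stump classifies this data correctly, but the weighted vote of three stumps does, which is the core idea behind boosting.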

For visual recognition tasks, combinations of rectangles are used as features. These rectangles are not true Haar wavelets, so they are more accurately called Haar-like features (often shortened to Haar features), as illustrated in the figure below. The value of a Haar feature is the difference between the average pixel values of its dark and light regions, and the feature is deemed present if this difference exceeds a specified threshold.
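A two-rectangle Haar feature can be computed as follows. This sketch uses an integral image (summed-area table), a common technique that lets any rectangle sum be read with four lookups. The 4x4 image, the function names, and the threshold of 100 are assumptions made for illustration.

```python
def integral_image(img):
    """ii[r][c] holds the sum of all pixels above and to the left of (r, c)."""
    height, width = len(img), len(img[0])
    ii = [[0] * (width + 1) for _ in range(height + 1)]
    for r in range(height):
        row_sum = 0
        for c in range(width):
            row_sum += img[r][c]
            ii[r + 1][c + 1] = ii[r][c + 1] + row_sum
    return ii

def rect_sum(ii, top, left, height, width):
    """Sum of the pixels inside a rectangle, using four table lookups."""
    bottom, right = top + height, left + width
    return ii[bottom][right] - ii[top][right] - ii[bottom][left] + ii[top][left]

def two_rect_feature(ii, top, left, height, width):
    """Mean of the right half minus mean of the left half of a window."""
    half = width // 2
    area = height * half
    left_mean = rect_sum(ii, top, left, height, half) / area
    right_mean = rect_sum(ii, top, left + half, height, half) / area
    return right_mean - left_mean

# Toy grayscale image: dark left half, light right half.
image = [
    [10, 10, 200, 200],
    [10, 10, 200, 200],
    [10, 10, 200, 200],
    [10, 10, 200, 200],
]
ii = integral_image(image)
value = two_rect_feature(ii, 0, 0, 4, 4)
feature_present = value > 100  # hypothetical threshold
print(value, feature_present)
```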

2. Face Recognition:

Once detection is complete, the next step is to recognise the face. Facial recognition technology is designed to identify or verify an individual based on images or videos captured by digital cameras. The detected facial images are extracted, cropped, resized, and often converted to grayscale. The facial recognition algorithm then analyses these images to identify the features that most accurately represent the individual.
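The preprocessing steps can be sketched in plain Python on a tiny image stored as nested lists of RGB tuples. The helper names, the face box coordinates, and the output size are hypothetical; the grayscale weights are the common 0.299/0.587/0.114 luma coefficients, and the resize uses simple nearest-neighbour sampling. In practice an image library would handle these operations.

```python
def crop(image, top, left, height, width):
    """Cut the face region out of the full image."""
    return [row[left:left + width] for row in image[top:top + height]]

def to_grayscale(rgb_image):
    """Convert RGB pixels to single luma values."""
    return [[round(0.299 * r + 0.587 * g + 0.114 * b) for (r, g, b) in row]
            for row in rgb_image]

def resize_nearest(image, new_height, new_width):
    """Nearest-neighbour resize to a fixed size."""
    old_height, old_width = len(image), len(image[0])
    return [[image[r * old_height // new_height][c * old_width // new_width]
             for c in range(new_width)]
            for r in range(new_height)]

BLACK, WHITE = (0, 0, 0), (255, 255, 255)
# Toy 4x4 image with a 2x2 white "face" in the middle.
image = [
    [BLACK, BLACK, BLACK, BLACK],
    [BLACK, WHITE, WHITE, BLACK],
    [BLACK, WHITE, WHITE, BLACK],
    [BLACK, BLACK, BLACK, BLACK],
]
face_box = (1, 1, 2, 2)  # top, left, height, width from a hypothetical detector
face = resize_nearest(to_grayscale(crop(image, *face_box)), 4, 4)
print(face)
```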

Face recognition technology is frequently employed in various applications such as smartphone authentication, surveillance systems, border security, and enhancing personalized customer interactions. Nevertheless, it brings forth significant ethical dilemmas, particularly regarding privacy, bias, and potential data misuse. It is essential to tackle these issues to guarantee the responsible implementation of face recognition technology.

References:

https://medium.com/@vrushabhkangale/face-recognition-system-using-open-face-480d581986b

https://blog.algorithmia.com/understanding-facial-recognition-openface/

https://www.cv-foundation.org/openaccess/content_cvpr_2015/app/1A_089.pdf